In 2015, as students in Bihar sat their grade ten school-leaving examinations, family members and friends scaled school walls to pass cheat sheets to the exam takers. Police at the examination venue had received bribes to look the other way.

The Bihar incident is not an isolated case. Cheating scandals are all too common across both developing and developed countries. Scores on high-stakes exams can determine a child’s future through access to better education opportunities and career possibilities, which creates pressure to perform well. This pressure can lead to intense studying, a market for tutoring and exam preparation, and, in the worst instances, widespread cheating that can involve students, parents, teachers and officials.

There are other risks of high-stakes examinations. When the examination itself does not demand understanding, students can score well through rote memorization, so the exam rewards memorization over meaningful learning. In addition, test-based accountability systems could incentivize teachers to shift focus from academically disadvantaged students to more advanced students (Neal & Schanzenbach, 2008).

The Role of Assessments as an Accountability Tool

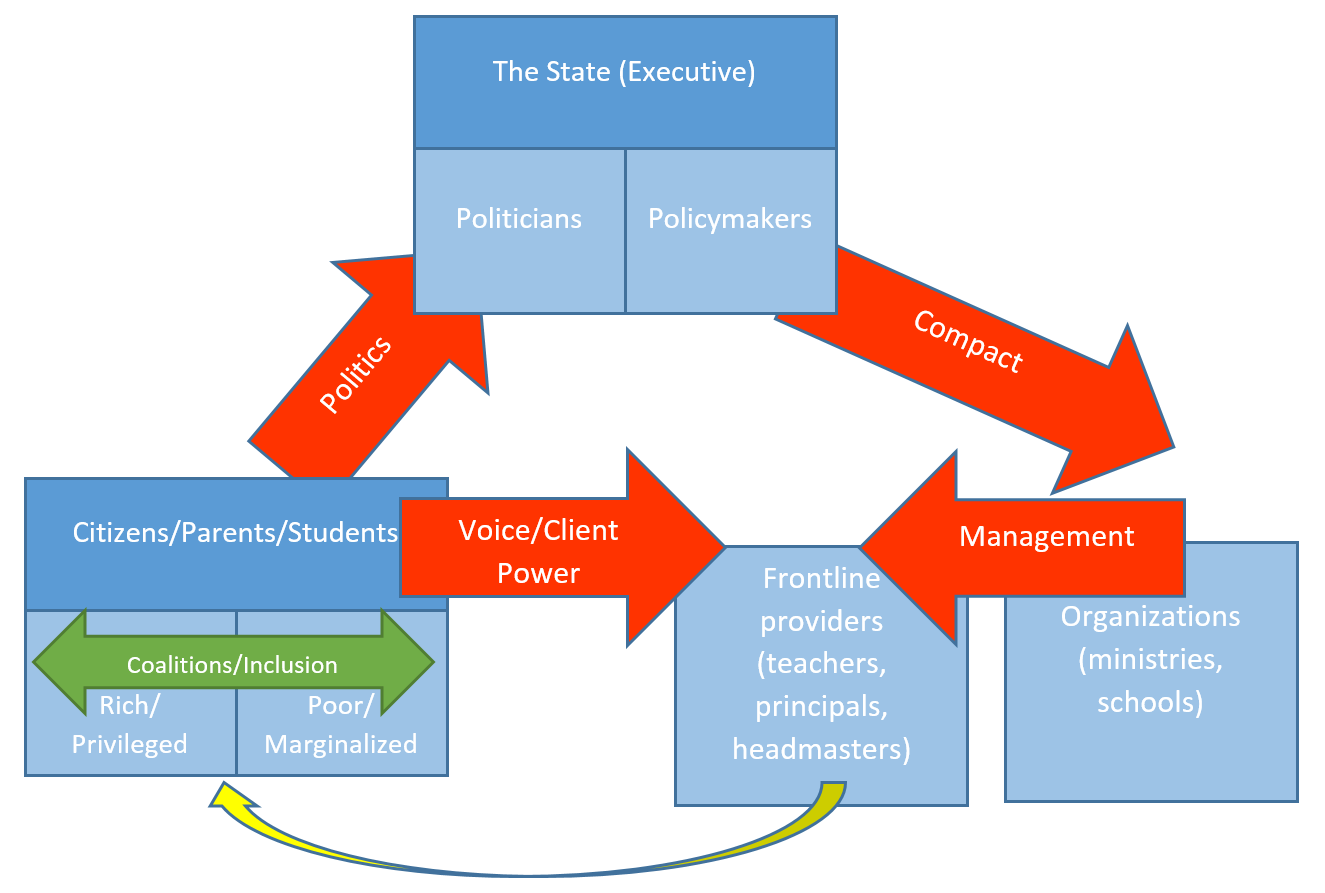

The World Development Report (WDR) 2004 maps out the framework of accountability relationships between clients, providers and policymakers in the delivery chain for services such as education, water, electricity, health, etc. A more granular view of the accountability relationships between various actors in an education ecosystem is shown below:

Source: Pritchett, L. (2015). Creating Education Systems Coherent for Learning Outcomes.

Information from examinations is a key component of the accountability relationships between various actors, as it helps them judge whether performance is adequate. Poor information leads to poor accountability.

But there are two common defects in this information.

One risk is having no regular, reliable information on learning at all, but plenty of information on easily observable inputs that may or may not be strongly related to learning. Rather than collecting information about learning gains (or the lack thereof), states and ministries mostly collect information on process compliance, such as attendance, budget allocation and inputs. When ministries and states make decisions about learning without information on learning, the accountability relationships are not aligned with learning.

Without good information on learning, actors have no accurate basis for their actions. Or they may judge education quality based on proxies that may hold no relationship with the quality of learning. For example, a teacher’s ability to teach may be judged by seniority or formal qualifications alone, which have proven to be inadequate indicators of student learning. In essence, poor testing leads to poor information, and ultimately, poor accountability relationships.

In particular, the lack of learning assessment information strongly affects the relationship between the parents/students and the state (politics) and the parents/students and teachers (client power). Without accurate information about the child’s learning progress, citizens may lack the impetus or ability to pressure the system to deliver better results.

The other risk involves testing that is ill-designed to assess desired learning and that is high stakes for the student.

Making the case that good testing is essential to strong accountability relationships, Newman Burdett argues in a recent RISE working paper that testing is critical to improving education outcomes. The paper emphasizes that assessment and measurement are necessary to gain information about learning outcomes and to make decisions about improving the quality of education systems.

What is a Good Assessment?

Burdett classifies assessments into three broad categories:

| Assessment type | Description |
| --- | --- |
| Monitoring assessments | Produce information at the national and regional level, not necessarily at the individual level, through long-term feedback loops. These can be national (e.g. SAEB or Prova Brasil in Brazil, ENLACE in Mexico) or internationally/regionally comparable assessments (e.g. PISA, TIMSS, SACMEQ). Most have sparse geographical coverage or the existing assessments do not provide comparable outcomes (Sandefur, 2016). |
| End-of-cycle or school leaving assessments | High stakes examinations influencing further education choices and employment. Examples include end of primary exams, school certificate exams, end of “high school” examinations that regulate entry to college/university (and which subjects can be studied). |
| Formative assessments | Day-to-day assessments providing dynamic feedback to improve learning through short-term feedback loops. |

Newman Burdett’s paper advances some ideas for how to create good assessments. Good assessments must have a clearly defined purpose, as multiple purposes lead to conflicts. Past experience has shown that using the same assessment both to monitor progress towards national standards of learning and for high-stakes teacher accountability creates tensions. Two risks apply here. Campbell’s law: “The more any quantitative social indicator is used for social decision-making, the more subject it will be to corruption pressures and the more apt it will be to distort and corrupt the social processes it is intended to monitor.” And Goodhart’s law: “When a measure becomes a target, it ceases to be a good measure.”

Assessments must also measure what learners are intended to learn. Furthermore, they should be reliable (will we get the same result if we repeat the assessment?) and valid (does the assessment measure what it claims to measure?).
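As a rough illustration of the reliability question, one common approach is test-retest reliability: the correlation between the scores the same students obtain on two sittings of the same assessment. The sketch below is a minimal example under stated assumptions, not a method taken from the paper. The function name `retest_reliability` is hypothetical and the scores are made-up illustrative values.

```python
from math import sqrt


def retest_reliability(first, second):
    """Pearson correlation between two sittings of the same assessment.

    `first` and `second` hold each student's score on sitting one and
    sitting two, in the same student order. Values near 1 suggest the
    assessment ranks students consistently across sittings.
    """
    if len(first) != len(second) or len(first) < 2:
        raise ValueError("need two equally long score lists with at least 2 students")

    n = len(first)
    mean_a = sum(first) / n
    mean_b = sum(second) / n

    # Sums of cross-products and squared deviations from the means.
    cov = sum((a - mean_a) * (b - mean_b) for a, b in zip(first, second))
    var_a = sum((a - mean_a) ** 2 for a in first)
    var_b = sum((b - mean_b) ** 2 for b in second)

    if var_a == 0 or var_b == 0:
        raise ValueError("scores must vary across students")

    return cov / sqrt(var_a * var_b)


# Hypothetical scores for five students on two sittings (illustrative only).
sitting_1 = [42, 55, 61, 70, 88]
sitting_2 = [45, 53, 64, 68, 90]

reliability = retest_reliability(sitting_1, sitting_2)
print(f"test-retest reliability: {reliability:.2f}")
```

A high correlation speaks only to reliability. It says nothing about validity: an exam that consistently rewards rote memorization can be highly reliable while still failing to measure the learning it claims to measure.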

Well-designed, reliable and valid assessments can provide evidence of student effort and student learning. They can offer information about what teachers teach and fail to teach. The experience of the United States with testing that is high stakes for schools, as part of the “No Child Left Behind” legislation, has produced a large literature on the dangers of too much high-stakes testing. But in many developing countries the challenge is that the education system only has information on inputs (from education management information systems, or EMIS) and examinations that are high stakes for the student. There is very little assessment for monitoring learning performance in the system (which can be low stakes for teachers and schools, as it can be sample based), and very little formative assessment that helps localities, schools, and teachers take actions that lead to effective learning. If education systems are to shift from a focus on inputs to learning outcomes, a focal point driving RISE research, then learning outcomes need to be monitored through meaningful assessments that are regular, reliable and valid tools for measuring learning.

The complete RISE working paper, “The Good, the Bad, and the Ugly: Testing as a Key Part of the Education Ecosystem,” by Newman Burdett can be found on the RISE website.

This is one of a series of blog posts from RISE, the large-scale education systems research programme supported by the UK’s Department for International Development (DFID) and Australia’s Department of Foreign Affairs and Trade (DFAT). Experts from the Center for Global Development lead RISE’s research team.
