
If you were to ask most ministers or agency heads whether aid effectiveness matters, they would surely give a resounding “yes.” But if you were to ask them which measures they report systematically to their board, their commitment would suddenly look less solid. So, in a new paper we propose a suite of 17 international agency-level indicators that we think provide a sound, evidence-based and balanced framework for measuring and comparing the “Quality of Official Development Assistance” (QuODA) across countries and agencies.

Are countries committed to aid effectiveness?

In a series of meetings spanning the last two decades, countries have repeatedly committed to principles of development cooperation intended to ensure its effectiveness, including as part of the 2030 Agenda, in which SDG 17 is to: “Strengthen the means of implementation and revitalize the global partnership for sustainable development.”

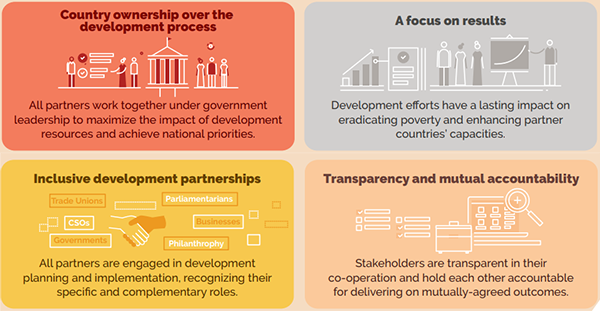

These commitments are now brought together under four principles covering Ownership, Results, Partnerships, and Transparency, currently monitored by the Global Partnership for Effective Development Cooperation (GPEDC):

Agreed principles of effective aid:

Source: Adapted from GPEDC at a glance

OECD countries’ focus on effectiveness, at least in terms of the above principles, appears to be waning. Political and senior aid management attention has shifted away from this topic since the landmark international meetings on aid effectiveness of the first decade of this millennium, which also underpinned a surge in aid by traditional donors. While GPEDC has broadened its scope to new countries and sectors, the focus on aid effectiveness in high-income countries has diminished.

GPEDC’s survey is an important tool for assessing recipients’ views of aid providers, but even for OECD-DAC providers, only a minority of recipient countries responded: one in five on average.

On the indicators that are compiled, quality may well be declining, but GPEDC shies away from highlighting the performance of individual agencies and provider countries. Last July it released its aggregate 2018 results, which went almost unnoticed, despite the fact that several important indicators, such as transparency and use of public finance systems, are flat-lining. Several others are regressing, including predictability, the use of recipient country results frameworks, and funds subject to parliamentary scrutiny in recipients.

Source: GPEDC Global Partnership 2019 Progress Headlines Report

Revising the measures of aid effectiveness

These broad principles of development effectiveness probably still make sense, but their monitoring by GPEDC no longer moves the needle on “provider” behaviours. In fact, the framework does not investigate what aid providers actually prioritise spending their money on, in terms of countries, purposes, and channels.

Moreover, we need to think more about how the principles apply in a changed global context. In particular, how should we adapt these principles for increasingly important fragile country contexts, where we now estimate that 400 million of the 450 million people who remain extremely poor will live by 2030? And how do we assess development aid spent on global challenges or “Global Public Goods,” such as climate change mitigation, which may not be most effectively tackled in the poorest countries?

On the positive side, in some areas we have better information than we did a decade ago, when our colleagues Nancy Birdsall and Homi Kharas at the Brookings Institution developed the original QuODA. One example is peer reviews of the quality of agencies’ learning from results on the ground. The original was designed to start tracking aid quality, in the specific sense of behaviours largely within aid providers’ control that are credibly associated with greater development impact. The better information now available also enables us to get underneath some headline commitments, such as greater transparency or aid untying, and look deeper at how well these are implemented in practice.

Finally, a decade of empirical research on development—which we document in the report—has knocked on the head some initial intuitions, fashions, and theories about aid effectiveness. The hardest ship to abandon for many in the aid “industry” has arguably been the proposition that aid works best in “well-governed” country contexts, as defined by some fixed set of ratings of the quality of country institutions and policies. Unfortunately, this idea is no longer clearly supported by cross-country evidence, conflicts with experience from fragile contexts in particular, and ignores the lesson that “good” policies are not unambiguously defined, but instead tend to be context-specific and path-dependent. We must adapt aid effectiveness measures accordingly.

Quality of ODA

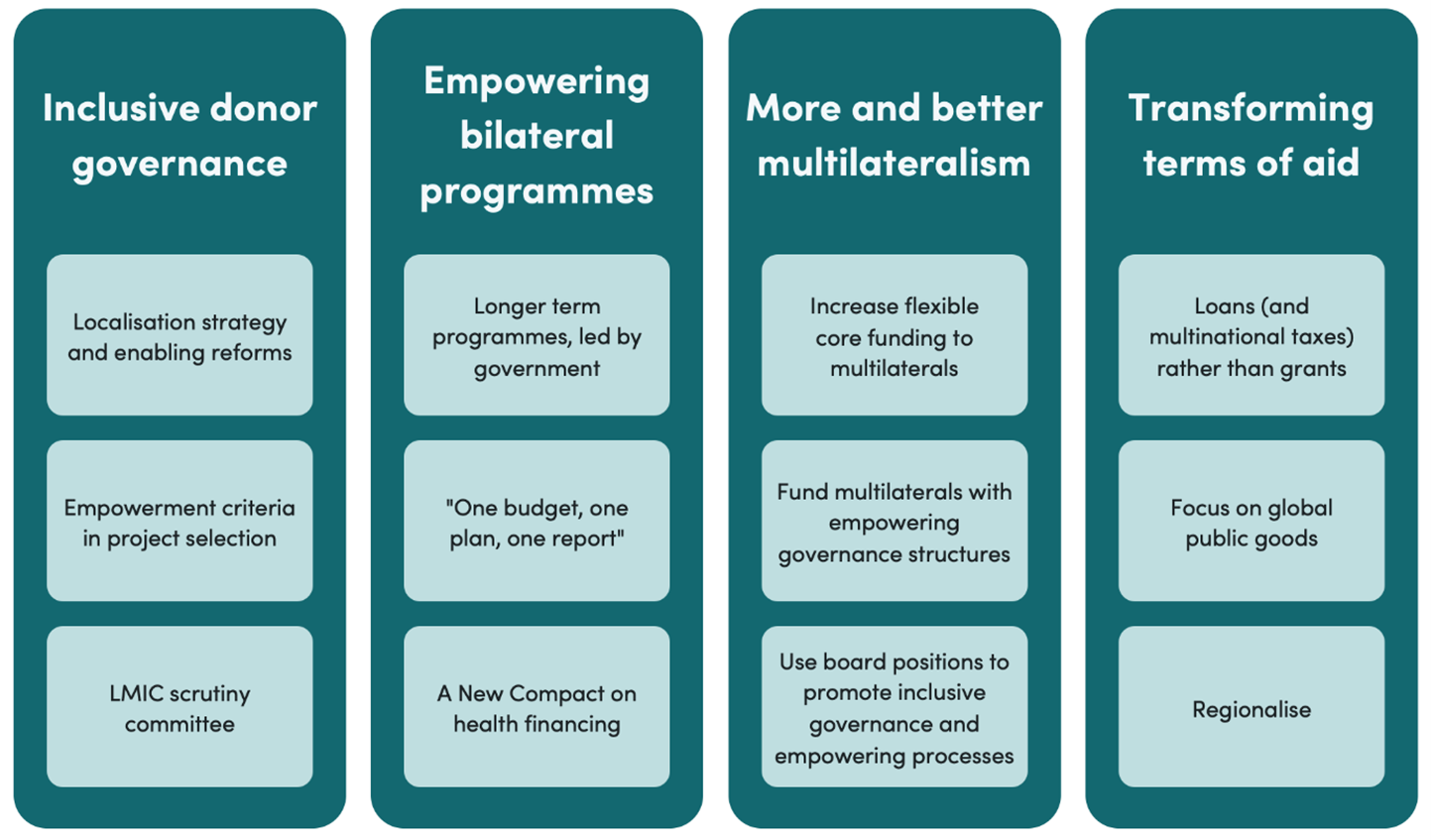

We have kept a few of the core indicators derived from GPEDC, and combined them with a battery of others, grouped under the following four themes of good aid:

1. Prioritisation

2. Ownership

3. Transparency

4. Learning

The full framework is summarised in the linked table. Several of these indicators will need further work to gather and calculate (see our blog on Evaluation and Learning) and, with Rachael Calleja, we hope to complete that work later this year.

In the meantime, reactions, suggestions, and ideas for future collaboration on this framework are welcome! Please do get in touch.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.