The Demographic and Health Surveys (DHS) sponsored by USAID are possibly the single biggest source of public health information in the developing world. While they focus on health and fertility issues, they’re also an important source for education statistics. In particular, the standard DHS questionnaire used in dozens of countries asks respondents to read a single sentence off a cue card, providing a valuable, direct (i.e., not just self-reported) measure of literacy in places where no other reliable info exists. As I noted in a previous post, this data provides one of the few internationally comparable measures of the quality of girls' schooling across a large number of poor countries. So three cheers for the DHS!

[Polite pause.]

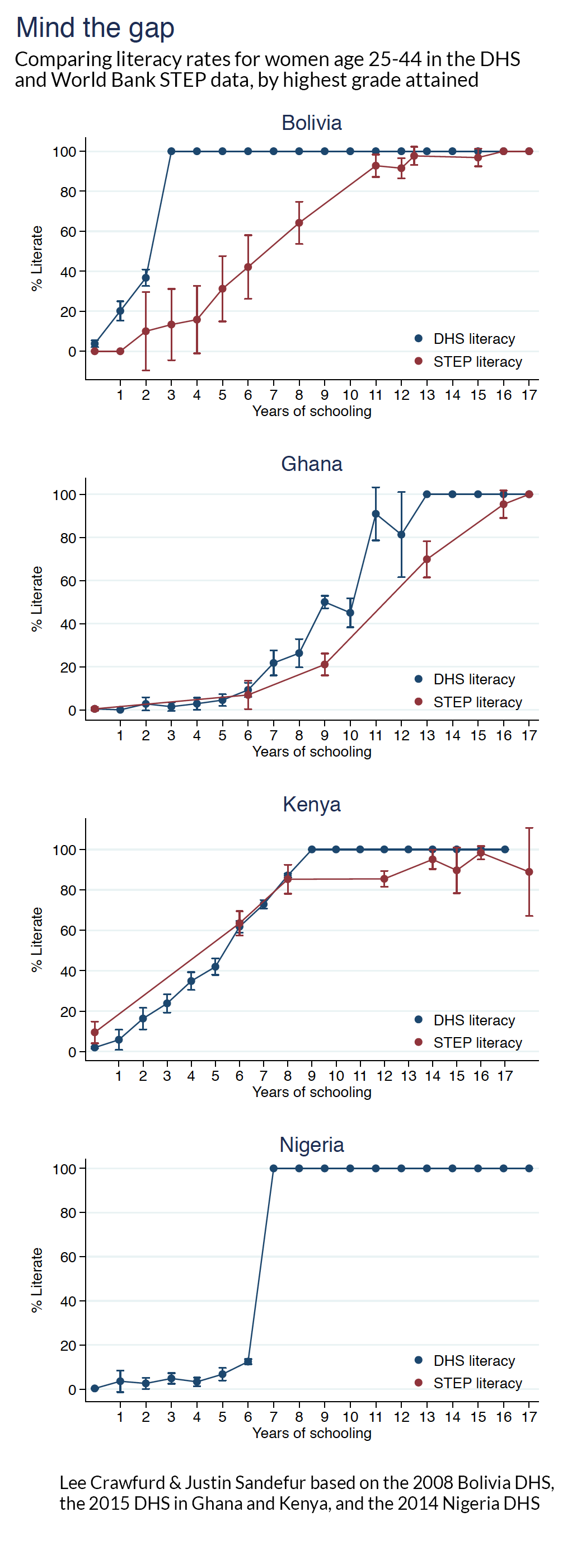

However… the value of the DHS literacy data is significantly undermined by a small, easily fixable flaw in the way the survey is administered. The result of this flaw, as I show below, is that the DHS significantly overstates literacy rates (and hence the quality of schooling) for large swaths of the world's population. The easy fix for this problem is a tiny tweak to the survey questionnaire.

The core of the problem is that the DHS doesn't test literacy for people who went to secondary school, and simply assumes they're literate. As it turns out, this is wildly optimistic. (To be clear, DHS is not being deceitful or misleading here, just explicitly choosing to give women who went to secondary school the benefit of the doubt.)

How do we know people who went to secondary school really aren't literate if the DHS doesn’t ask? I asked my former CGD colleague Lee Crawfurd of Ark what other data sources we could cross-reference, and he noted that the World Bank asks adults similar questions. The STEP Skills Measurement Program administers a battery of cognitive skills tests to a nationally representative sample of adult respondents in a dozen or so countries around the world, including three developing countries that also have DHS literacy data.

What we found when we compared the two data sources

For comparability, Lee and I looked at similar demographic samples across both surveys: here we use women age 25 to 44 at the time of the survey with anywhere from zero to eighteen years of schooling, scored by whether they could read a single sentence. (We focused on women because we were writing a paper about girls' schooling, but this could be repeated for men in countries where DHS does a men’s survey.) Note that respondents in the DHS are given one sentence to read, while STEP records whether they could read any of the twenty sentences they were shown. The STEP survey was also administered between 2011 and 2013, while survey years for the DHS rounds used here range from 2008 to 2015, though it seems improbable this timing issue could explain the discrepancies we see.

The precise threshold varies by country for how much schooling you need before DHS stops asking the literacy question and just starts assuming. In most cases, enumerators skip the question if the respondent has gone beyond primary school. In some cases, the cutoff is lower (as in Bolivia, where DHS assumes you're literate if you made it to third grade), and in some cases it's higher (like in Ghana, one of only two countries we've found where DHS keeps asking the question through the end of senior secondary school).
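To make the mechanics of the skip rule concrete, here is a minimal Python sketch of how the filter changes the recorded literacy rate at each grade. Everything in it is hypothetical: the function name `literacy_by_grade`, the `skip_above` parameter, and the eight made-up respondents are illustrations only, not the DHS recode logic or real survey data.

```python
from collections import defaultdict


def literacy_by_grade(respondents, skip_above=None):
    """Share of respondents recorded as literate at each grade.

    respondents: iterable of (years_of_schooling, can_read) pairs.
    skip_above:  hypothetical cutoff. Anyone with more schooling than this
                 is not tested and is simply recorded as literate.
                 None means everybody is tested.
    """
    totals = defaultdict(int)
    literate = defaultdict(int)
    for grade, can_read in respondents:
        totals[grade] += 1
        if skip_above is not None and grade > skip_above:
            literate[grade] += 1  # assumed literate, never tested
        elif can_read:
            literate[grade] += 1  # actually passed the reading test
    return {g: literate[g] / totals[g] for g in sorted(totals)}


# Made-up respondents: (years of schooling, could read the sentence).
sample = [
    (6, False), (6, False), (6, True), (6, False),
    (7, True), (7, False), (7, False), (7, True),
]

tested = literacy_by_grade(sample)                 # test everybody
filtered = literacy_by_grade(sample, skip_above=6)  # skip rule after grade 6
```

In this toy sample the grade-six rate is identical under both rules, while the grade-seven rate is whatever the test finds when everyone is tested and exactly 100 percent once the filter applies, regardless of what those respondents can actually read.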

As the graphs clearly show, assuming people are literate leads DHS to some dubious conclusions. Rather than 100 percent literacy, the STEP test finds that the real literacy rate for Bolivian women with a third-grade education is probably under 20 percent. Granted, these discrepancies are not as stark in Ghana and Kenya. But even looking at Ghanaian women who left school after secondary, STEP’s actual literacy rates are about 70 percent rather than 100 percent. And there are many other countries without STEP data to prove the point where the sudden jump to 100 percent literacy in the DHS seems like a fairly severe problem.

Nigeria is an extreme case, where barely 10 percent of women who left school after sixth grade can read a single sentence according to the DHS, but those who went on to a seventh year are assumed to be 100 percent literate. Again, there's no STEP benchmark to compare to for Nigeria, but that leap seems improbable, if not arithmetically impossible.

As a side note, we should acknowledge that in theory the admissions requirements for Nigerian secondary schools could be so stringent that, simply due to selection rather than any additional learning, literacy rates really do jump up at secondary school. In practice, this doesn't seem to be the case. I emailed my colleague Lant Pritchett about this alternative explanation, since he's written a whole book about how "Schooling Ain't Learning", and he replied "empirically cannot be true." Simply look at the share of Nigerian students moving from grade six to seven (i.e., most). The number of students being winnowed out just isn't big enough to produce a literacy jump from 10 percent to 100 percent, and he sent me this graph. (Q.E.D.)
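The arithmetic behind the selection argument can be sketched in a few lines of Python. This is my own back-of-the-envelope framing, not Lant's calculation: the function names are hypothetical, and the 80 percent continuation share and 30 percent pool literacy rate are placeholder assumptions, not Nigerian data. Only the 10 percent and 100 percent figures come from the DHS numbers discussed above.

```python
def pool_literacy_required(share_continuing, leavers_literacy,
                           continuers_literacy=1.0):
    """Literacy rate needed among everyone finishing grade six for the
    observed rates to arise from selection alone (no learning in grade seven).

    This is a weighted average of the two groups leaving grade six:
    those who continue and those who stop.
    """
    return (share_continuing * continuers_literacy
            + (1 - share_continuing) * leavers_literacy)


def max_literacy_from_selection(pool_literacy, share_continuing):
    """Upper bound on literacy among continuers under perfect selection.

    Even if every literate grade-six finisher continues, continuers cannot
    contain more literate people than the whole pool does.
    """
    if not 0 < share_continuing <= 1:
        raise ValueError("share_continuing must be in (0, 1]")
    return min(1.0, pool_literacy / share_continuing)


# Assumption: 80 percent of grade-six finishers go on to grade seven.
required = pool_literacy_required(share_continuing=0.8, leavers_literacy=0.10)

# Assumption: 30 percent of all grade-six finishers can read the sentence.
ceiling = max_literacy_from_selection(pool_literacy=0.3, share_continuing=0.8)
```

Under these placeholder numbers, selection alone would require roughly 82 percent of all grade-six finishers to be literate already, and a pool that is only 30 percent literate could yield continuers who are at most about 38 percent literate. The more students move on to grade seven, the closer the continuers' literacy rate must sit to the rate for the whole pool, which is why a jump from 10 to 100 percent is so hard to attribute to winnowing.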

Luckily, this measurement problem has an easy fix

At a recent research workshop on the demographic effects of girls' schooling, I was presenting econometric results using this DHS literacy data and struggling to explain the possible biases due to the truncation of the literacy variable. Ann Blanc of the Population Council helpfully pointed out that there was an obvious solution requiring no econometrics: ask DHS to remove the filter on the question. Just test everybody.

So that's my simple plea. During the last administration, Michelle Obama's slogan was to "Let all girls learn," which I obviously applaud. And as a first step toward taking that project seriously, I have a minor data request: "let all illiterate women (and men) be counted."

Update: After posting this, I learned via the hive mind of Twitter that the newest DHS questionnaire has already made (more or less) the change recommended at the end of this piece. See here for a June 1st DHS blog post unveiling the change to how literacy is measured, including data from Malawi, which is the first country to field the new questionnaire. So while my worries about existing literacy measures remain, new data going forward should give us a more complete picture.

Disclaimer

CGD blog posts reflect the views of the authors, drawing on prior research and experience in their areas of expertise. CGD is a nonpartisan, independent organization and does not take institutional positions.